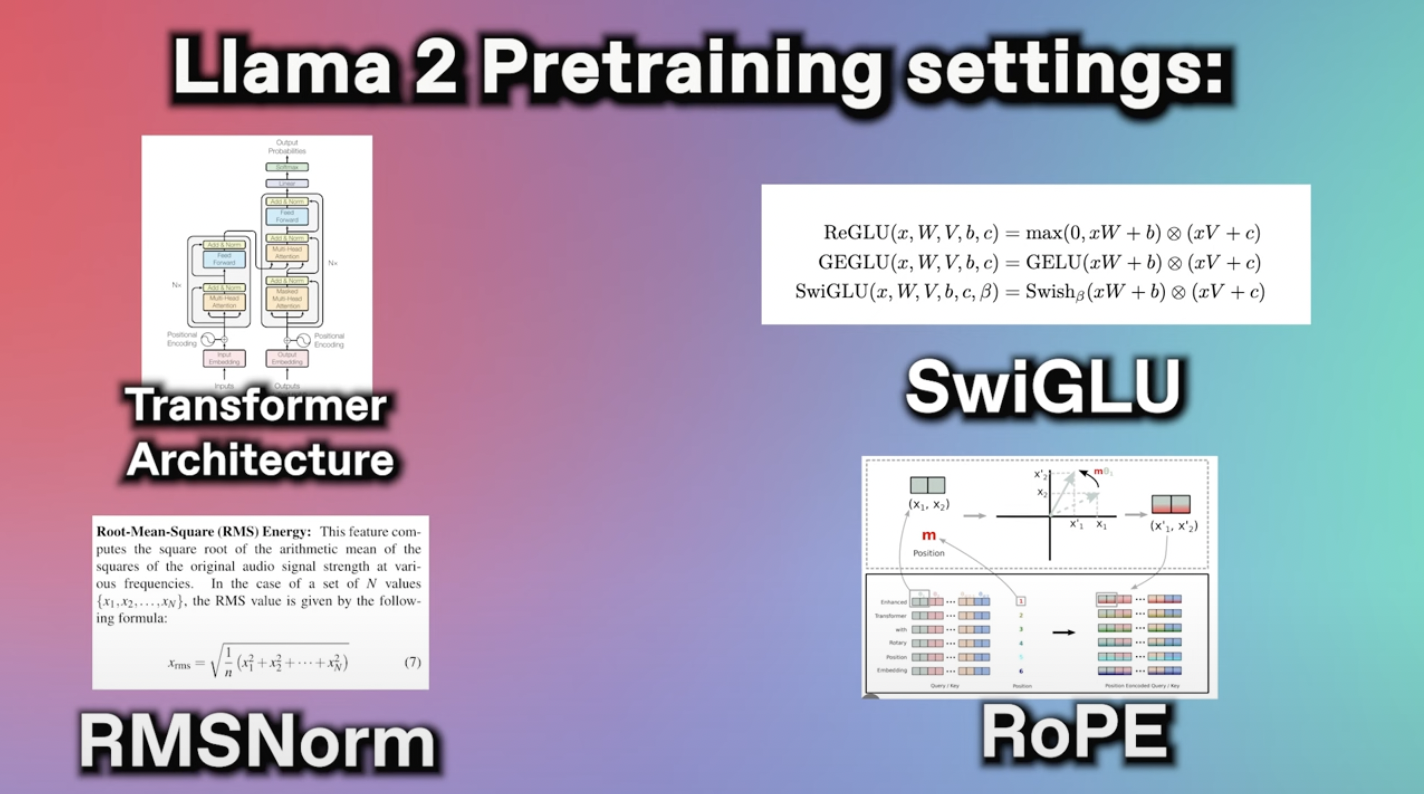

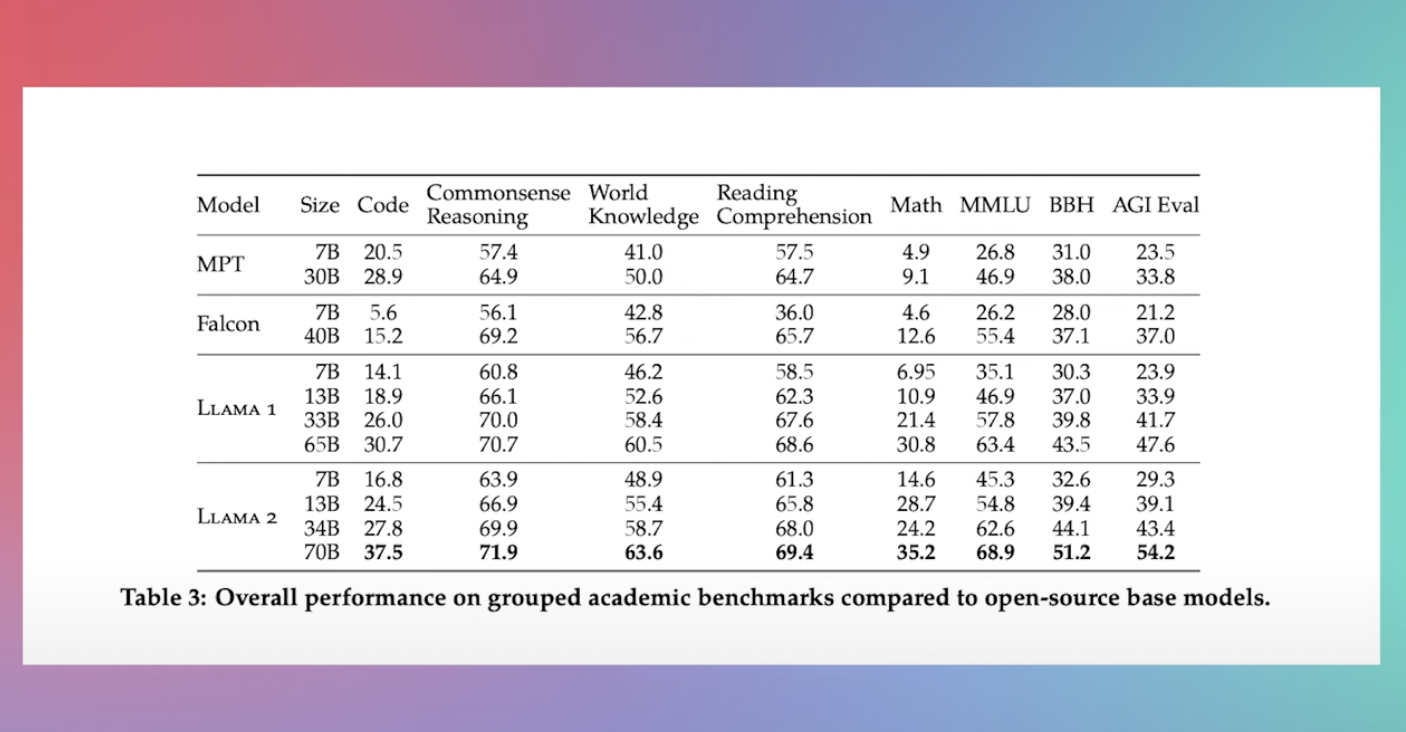

The Llama 2 paper describes the architecture in good detail to help data scientists recreate and fine-tune ... The video above goes over the architecture of Llama 2 and a comparison of Llama 2 and Llama 1. The abstract of the Llama 2 paper begins: "In this work, we develop and release Llama 2, a collection of ..." The original LLaMA paper begins: "We introduce LLaMA, a collection of foundation language models ranging from 7B to ..." The Code Llama paper begins: "We release Code Llama, a family of large language models for code based on Llama 2 providing state-of ..." Model architecture: Llama 2 is an auto-regressive language model that uses an optimized transformer architecture.
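To make "auto-regressive" concrete, here is a minimal sketch of greedy decoding: each new token is chosen from scores computed over all tokens generated so far, then appended to the input for the next step. The `toy_scores` function is a hypothetical stand-in for a real model and has nothing to do with Llama 2's actual weights or tokenizer; only the loop structure is the point.

```python
from typing import Callable, List


def greedy_decode(
    next_token_scores: Callable[[List[int]], List[float]],
    prompt: List[int],
    max_new_tokens: int,
    eos_id: int,
) -> List[int]:
    """Generate tokens one at a time, feeding each output back as input."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)  # one score per vocabulary entry
        next_id = max(range(len(scores)), key=scores.__getitem__)
        tokens.append(next_id)
        if next_id == eos_id:  # stop once the end-of-sequence token appears
            break
    return tokens


def toy_scores(tokens: List[int]) -> List[float]:
    """Hypothetical model: always prefers (last token + 1) mod 5."""
    vocab_size = 5
    target = (tokens[-1] + 1) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


print(greedy_decode(toy_scores, [0], max_new_tokens=10, eos_id=4))
# prints [0, 1, 2, 3, 4]
```

A real model would replace `toy_scores` with a transformer forward pass, and sampling strategies other than greedy selection are common in practice.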

This repo contains GPTQ model files for Meta's Llama 2 7B Chat. Multiple GPTQ parameter permutations are provided. Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. GPTQ falls under the PTQ (post-training quantization) category, making it a compelling choice for massive models. Notebook with the Llama 2 13B GPTQ model ... Anyway, it is true that the ...
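A rough back-of-the-envelope calculation shows why quantization matters at these sizes. The sketch below estimates weight storage only, assuming nominal parameter counts (7B, 13B, 70B) and comparing 16-bit weights with 4-bit weights. The helper `weight_memory_gib` is hypothetical, and the estimate ignores quantization metadata such as scales and zero points, as well as activations and the KV cache, so real GPTQ files will differ somewhat.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate storage for model weights alone, in GiB."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 2**30


# Nominal sizes; actual parameter counts differ slightly.
for name, n_params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    fp16 = weight_memory_gib(n_params, 16)
    int4 = weight_memory_gib(n_params, 4)
    print(f"Llama 2 {name}: 16-bit ~{fp16:.1f} GiB, 4-bit ~{int4:.1f} GiB")
```

Under these assumptions, 4-bit weights take a quarter of the space of 16-bit weights, which is the difference between a model fitting on a single consumer GPU or not.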

Llama 2 with 32k context. Requirements: `pip install --upgrade pip`, then `pip install transformers==4.33.2 sentencepiece accelerate`. Llama Long: Additional ... We have a broad range of supporters around the world who believe in our open approach to today's AI: companies that have given early feedback and are excited to build with Llama 2, cloud ... Llama 2 pretrained models are trained on 2 trillion tokens and have double the context length of Llama 1. Its fine-tuned models have been trained on over 1 million human annotations. Meta introduces Llama 2 Long, with context windows of up to 32,768 tokens. The 70B variant can already surpass gpt-3.5-turbo-16k's overall performance on a suite of long-context ... Llama 2 Long is an extension of Llama 2, an open-source AI model that Meta released in the summer, which can learn from a variety of data sources and perform multiple tasks.
